FLOE Metadata Authoring Design - Early v2

This design document is for reference only. For the current designs, see the FLOE Metadata Authoring Design document.

Early Iteration 2 - Accessibility Metadata with Modality Relationships

Summary:

- As each piece of content is uploaded, sensible default metadata is automatically generated for it.

- The user adjusts the metadata as needed.

- As work progresses, a graphical indicator shows the user the different modalities and accessibility features they have covered.

- As content is added in other modalities (such as captions), the relevant "hasAdaptation" metadata is generated for the primary video content, and "isAdaptationOf" metadata is generated for the alternative content.

- Badges in the sidebar make the user aware of other modalities and encourage them to consider adding them.

- Descriptive words (i.e., semantic tags) are also generated based on the content type.
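
The paired "hasAdaptation"/"isAdaptationOf" metadata can be illustrated with a minimal Python sketch. It assumes a hypothetical in-memory `Resource` record and a hypothetical `link_adaptation` helper; neither is part of any FLOE API, and only the two property names come from this design. What it shows is that adding one alternative updates both records, so the relationship stays consistent in both directions.

```python
from dataclasses import dataclass, field


@dataclass
class Resource:
    """Hypothetical content record; field names are illustrative only."""
    resource_id: str
    modality: str  # e.g. "video" or "captions"
    has_adaptation: list[str] = field(default_factory=list)
    is_adaptation_of: list[str] = field(default_factory=list)


def link_adaptation(primary: Resource, alternative: Resource) -> None:
    """Record the relationship in both directions, ignoring repeats."""
    if alternative.resource_id not in primary.has_adaptation:
        primary.has_adaptation.append(alternative.resource_id)
    if primary.resource_id not in alternative.is_adaptation_of:
        alternative.is_adaptation_of.append(primary.resource_id)


def to_metadata(resource: Resource) -> dict:
    """Serialize with the property names used in this design."""
    return {
        "id": resource.resource_id,
        "hasAdaptation": list(resource.has_adaptation),
        "isAdaptationOf": list(resource.is_adaptation_of),
    }


# Usage: the author uploads a video, then adds captions for it.
video = Resource("algebra-video", "video")
captions = Resource("algebra-captions", "captions")
link_adaptation(video, captions)
print(to_metadata(video))
# {'id': 'algebra-video', 'hasAdaptation': ['algebra-captions'], 'isAdaptationOf': []}
print(to_metadata(captions))
# {'id': 'algebra-captions', 'hasAdaptation': [], 'isAdaptationOf': ['algebra-video']}
```

Because both sides are written by the same helper, the author never has to enter the reverse relationship by hand.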

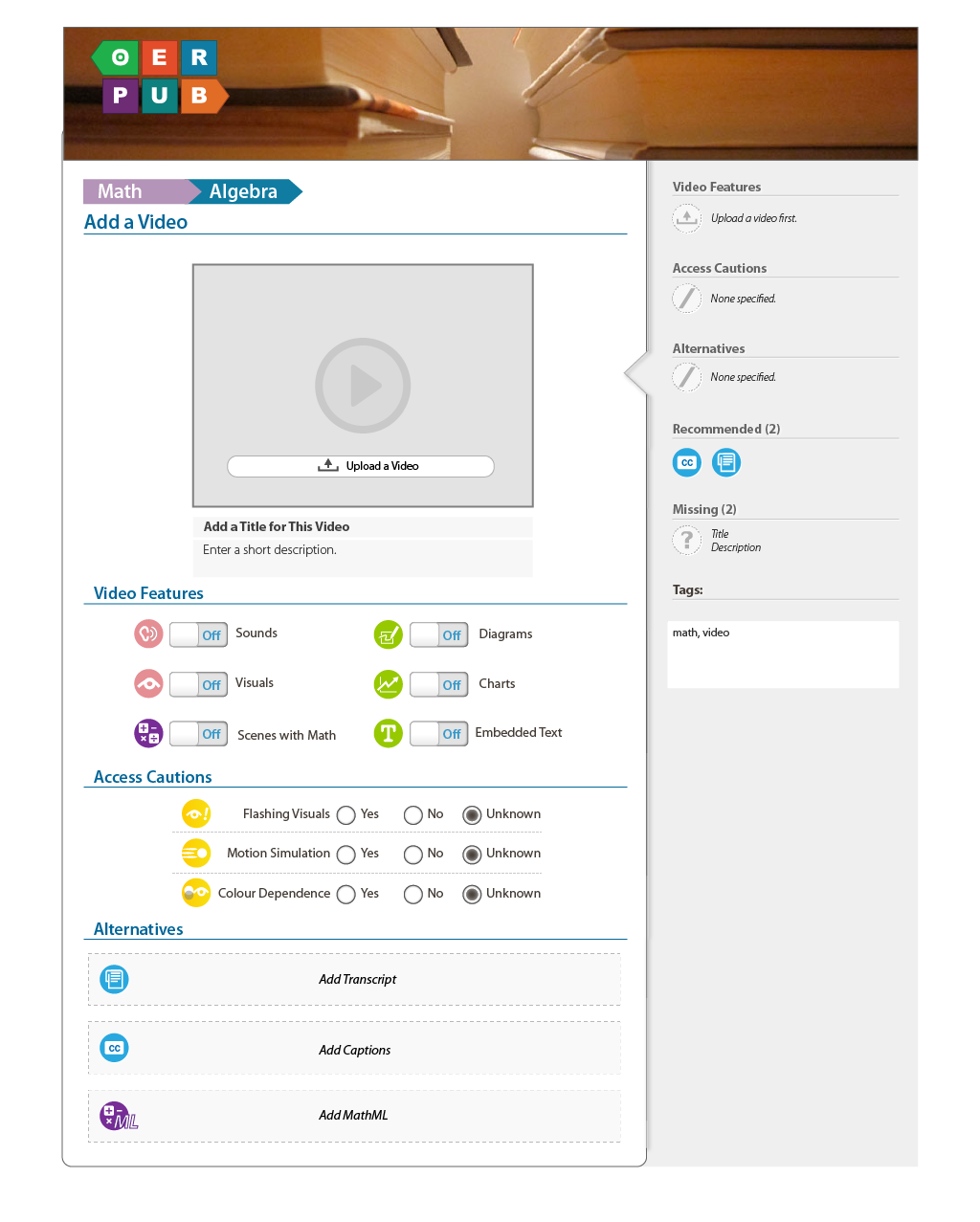

Above: An author intends to create a learning resource for Algebra based on a video. In the (hypothetical) learning repository, the author has selected the appropriate subject ("Math" and "Algebra") and then the "Add Video" option (not pictured). The image shows the default interface the author sees when creating a video resource for Math.

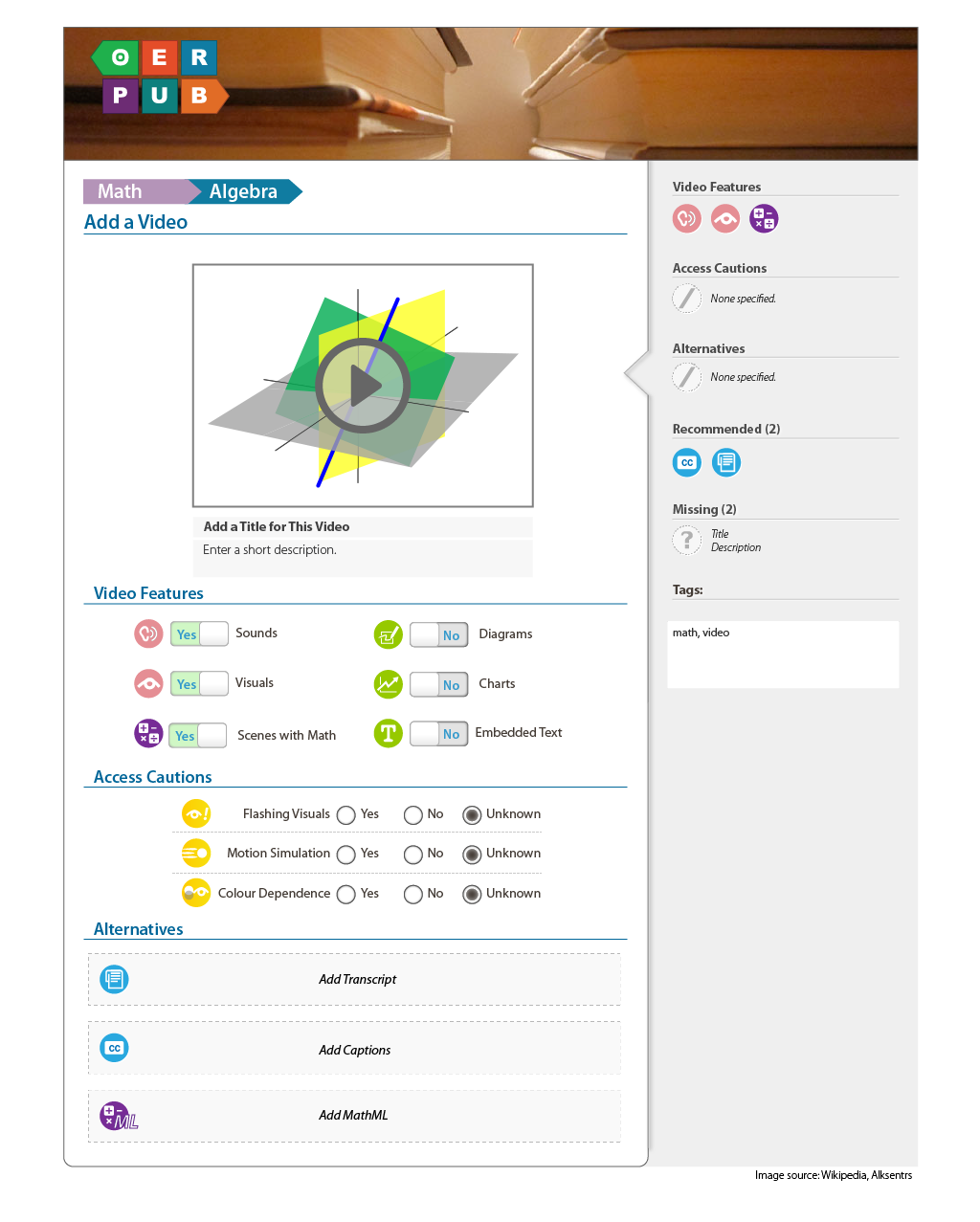

The image above shows the interface after the user has uploaded a video. Because the content is a video, the system assumes it contains both visuals and audio, so the corresponding toggles are selected. Because the content is in the Math category, "Scenes with Math" is also enabled. Metadata is generated in the background based on these selections.
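
The defaulting behavior described above can be sketched as a pair of rule tables, one keyed by content type and one by subject. This is a minimal, hypothetical sketch: the tables, the toggle names, and the mapping of toggles onto schema.org-style `accessMode` values (`visual`, `auditory`, `mathOnVisual`) are assumptions for illustration, since this design does not name a metadata vocabulary.

```python
# Hypothetical rule tables: which toggles a content type or subject turns on.
TOGGLES_BY_CONTENT_TYPE = {
    "video": {"visuals", "audio"},
}
TOGGLES_BY_SUBJECT = {
    "Math": {"scenes_with_math"},
}
# Assumed mapping from UI toggles to schema.org-style accessMode values.
ACCESS_MODE_BY_TOGGLE = {
    "visuals": "visual",
    "audio": "auditory",
    "scenes_with_math": "mathOnVisual",
}


def default_toggles(content_type: str, subjects: list[str]) -> set[str]:
    """Union of the toggles implied by the content type and each subject."""
    toggles = set(TOGGLES_BY_CONTENT_TYPE.get(content_type, set()))
    for subject in subjects:
        toggles |= TOGGLES_BY_SUBJECT.get(subject, set())
    return toggles


def metadata_for(toggles: set[str]) -> dict:
    """Regenerate background metadata from whichever toggles are selected."""
    modes = sorted(
        ACCESS_MODE_BY_TOGGLE[t] for t in toggles if t in ACCESS_MODE_BY_TOGGLE
    )
    return {"accessMode": modes}


# Usage: a video uploaded under "Math" and "Algebra".
toggles = default_toggles("video", ["Math", "Algebra"])
print(metadata_for(toggles))
# {'accessMode': ['auditory', 'mathOnVisual', 'visual']}
```

Keeping the rules in data rather than in code would let another subject contribute its own defaults without changing the derivation logic. When the user later switches a toggle off, calling `metadata_for` again with the updated set keeps the metadata in step with the interface.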

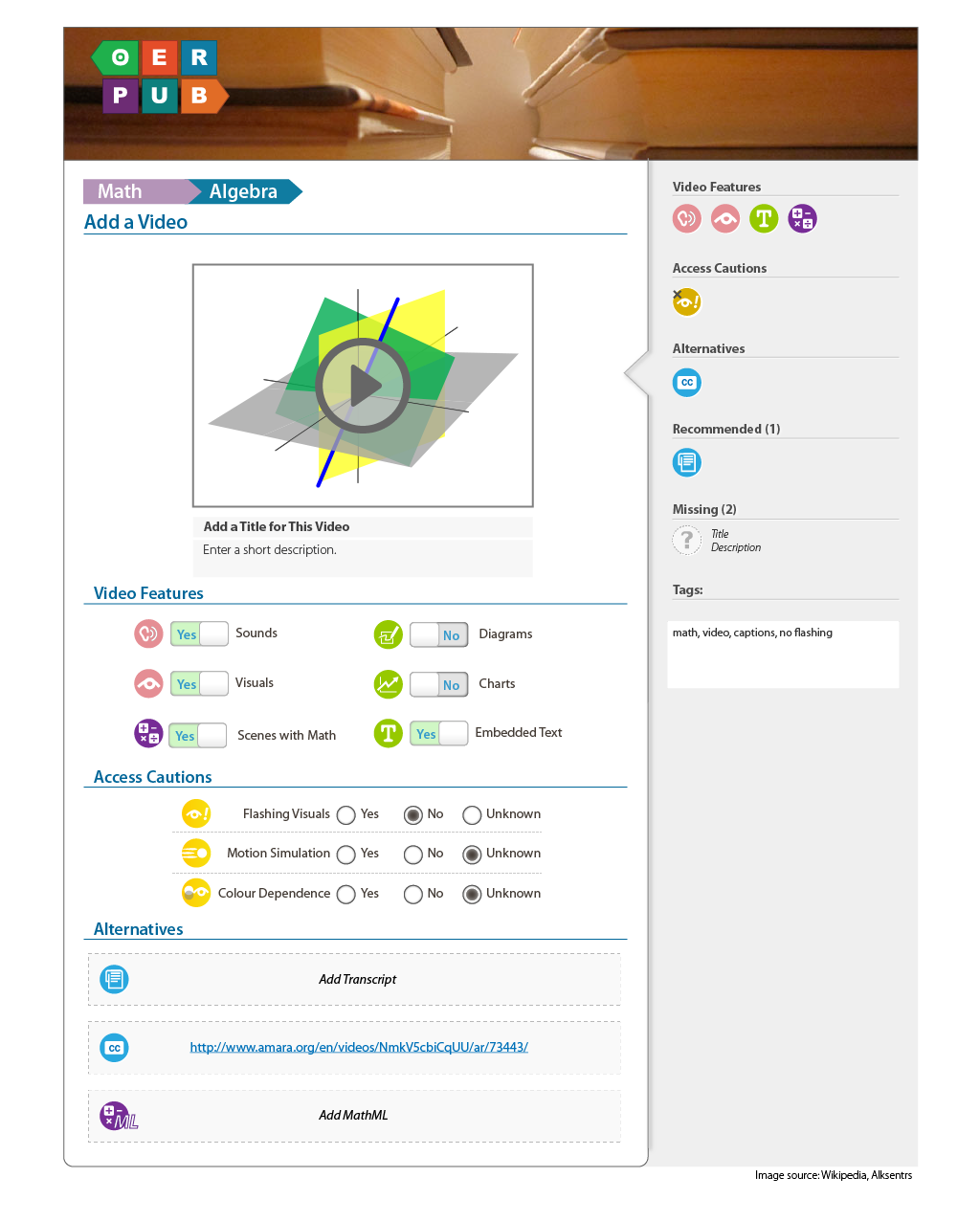

The user has now refined their options and added captions. As the user makes these changes, the badges in the sidebar update to show what the user has accomplished so far. Semantic words (tags) based on these settings are also added for the user's convenience.
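
One way the sidebar badges and the convenience tags could both be derived from the same set of author settings is sketched here. The tag table, the list of tracked modalities, and the `sidebar_state` function are all hypothetical; the design does not specify which tags or badges exist. Earned badges are the tracked modalities already covered, and the rest are surfaced as suggestions, in line with the goal of encouraging authors to consider other modalities.

```python
# Hypothetical mapping from author settings to suggested semantic tags.
TAGS_BY_SETTING = {
    "visuals": ["video", "visual"],
    "audio": ["audio", "narration"],
    "scenes_with_math": ["math", "equations"],
    "captions": ["captions", "text alternative"],
}
# Modalities the sidebar tracks as badges (illustrative list only).
TRACKED_MODALITIES = ["visuals", "audio", "captions", "audio_description"]


def sidebar_state(settings: set[str]) -> dict:
    """Split tracked modalities into earned/suggested badges and collect tags."""
    earned = [m for m in TRACKED_MODALITIES if m in settings]
    suggested = [m for m in TRACKED_MODALITIES if m not in settings]
    tags: list[str] = []
    for setting in sorted(settings):  # sorted for a stable tag order
        for tag in TAGS_BY_SETTING.get(setting, []):
            if tag not in tags:
                tags.append(tag)
    return {"earned_badges": earned, "suggested_badges": suggested, "tags": tags}


# Usage: the video defaults plus the captions the user just added.
state = sidebar_state({"visuals", "audio", "scenes_with_math", "captions"})
print(state["earned_badges"])     # ['visuals', 'audio', 'captions']
print(state["suggested_badges"])  # ['audio_description']
```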

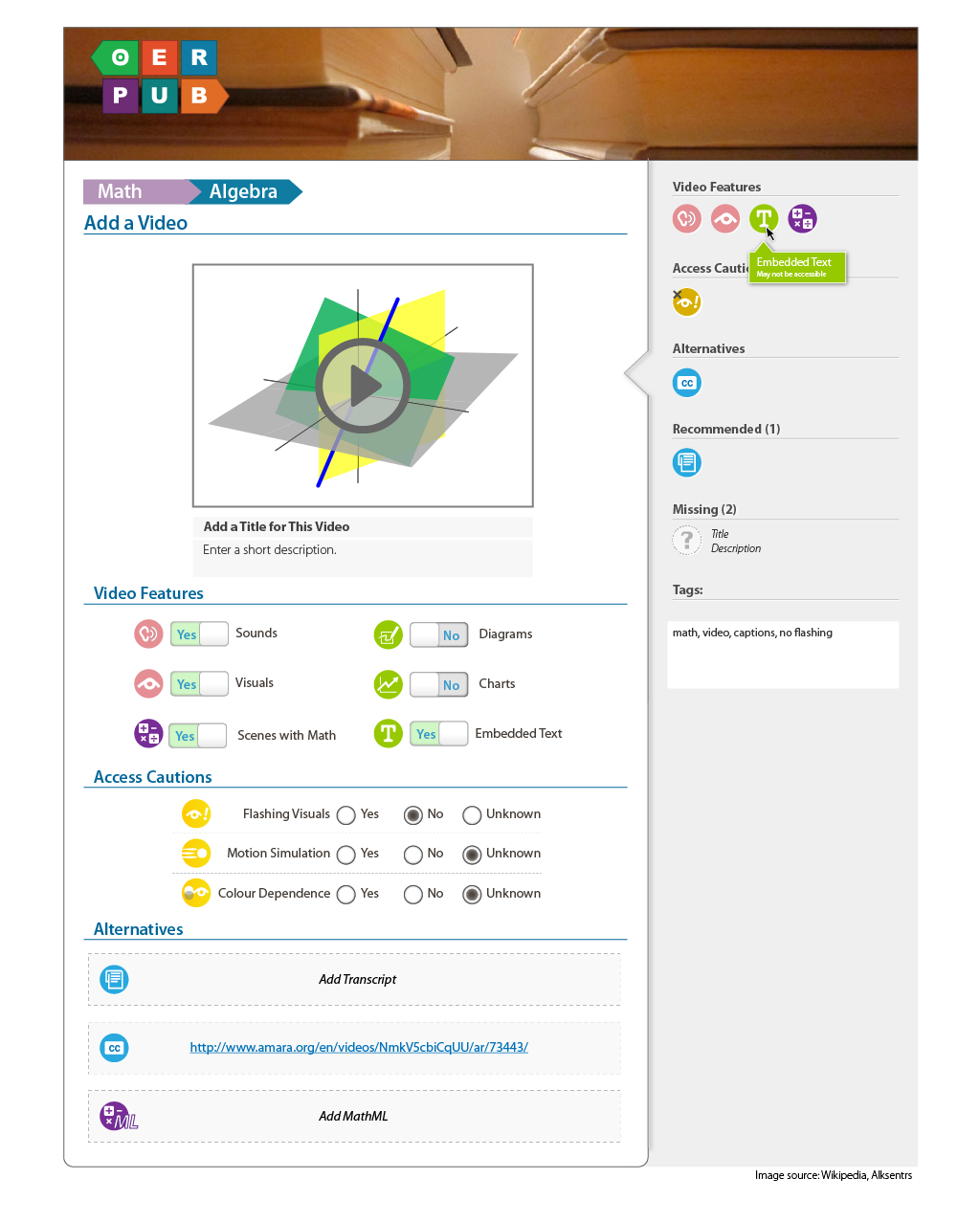

Hovering over an icon in the sidebar displays a short description of its meaning.